India is rapidly emerging as a key player in the global AI landscape, leveraging technology to transform industries like healthcare, smart cities, education, and law. As a dynamic economy, India is increasingly using AI to tackle complex problems and create innovative solutions.

Global AI leaders like OpenAI, Google, and Meta are driving the growth of generative AI with ground-breaking services such as ChatGPT, Gemini, and LLaMA. While AI offers vast potential, concerns over misuse, bias, privacy, and ethics have raised the need for strong regulatory frameworks to address these challenges.

In India, policymakers and stakeholders are working to develop regulations that balance innovation with risk mitigation. While AI regulations in India are still evolving, they aim to address issues such as data protection, algorithmic transparency, and accountability. However, challenges remain in creating an adaptable and effective framework amidst rapid technological advancements.

As India navigates these developments, it must consider the broader implications of AI on employment, privacy, security, and societal equity. Ensuring AI is developed and deployed responsibly is essential to maximizing its benefits while minimizing risks. This article explores India’s current AI regulations and the actions that companies and entrepreneurs need to take to comply with them.

CURRENT STATE OF AI REGULATIONS IN INDIA

Currently, India does not have a dedicated regulatory framework that focuses solely on Artificial Intelligence. However, the Indian Government has taken steps to ensure that AI development is ethical and protects legal interests. This includes applying existing legal provisions for governing AI and introducing initiatives focused on data privacy, data security and consumer protection.

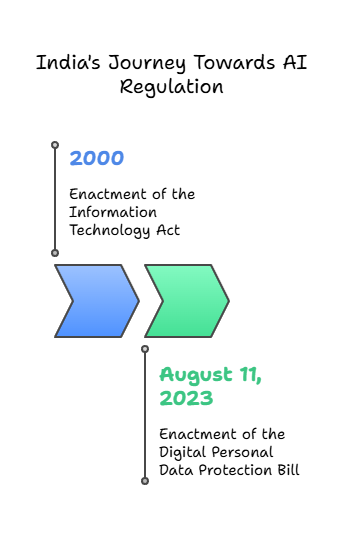

The Information Technology Act, 2000 (IT Act) serves as the primary legal foundation for regulating electronic transactions, cybercrimes and online safety in India. While it was initially drafted to address the growing use of the internet and digital technologies, the Act provides a broad framework that is relevant to AI-related activities. It includes provisions related to cybersecurity and data protection, offering a starting point for regulating AI systems, particularly in cases involving electronic transactions and digital infrastructure. Some of the relevant provisions in the IT Act are as follows –

- Section 43A – This section addresses the handling of sensitive personal data or information by corporate bodies. It enables compensation for data privacy breaches resulting from negligent handling of sensitive personal data. AI systems processing user data must comply with this provision to avoid legal repercussions.

- Section 65 – This section addresses tampering with computer source documents. The offence is punishable with imprisonment for up to 3 years, a fine of up to Rs. 2 Lakhs, or both.

- Section 66C – This section addresses identity theft and prescribes the penalties for the offence: imprisonment for up to 3 years and a fine of up to Rs. 1 Lakh.

- Section 66D – This section provides for the punishment for cheating by personation using computer resources. It is particularly relevant to AI-enabled impersonation, deepfakes and other AI-generated fraudulent content.

- Section 67 – This section prohibits publishing or transmitting obscene material in electronic form, which can extend to inappropriate or harmful content generated by AI systems. A first conviction is punishable with imprisonment of either description for a term which may extend to three years and with a fine which may extend to five lakh rupees. A second or subsequent conviction is punishable with imprisonment of either description for a term which may extend to five years and with a fine which may extend to ten lakh rupees.

- Section 69B – This section empowers the Central Government to monitor and collect traffic data or information through any computer resource in order to enhance cyber security.

On 11th August, 2023, the President of India gave assent to the Digital Personal Data Protection Bill, 2023, following its approval by both Houses of Parliament, enacting it as the Digital Personal Data Protection Act, 2023 (Data Protection Act). The law is still awaiting implementation, which is expected to occur in a phased manner. This delay is due to the need to establish the Data Protection Board of India and frame subordinate rules to provide procedural guidance. The Act is expected to play a pivotal role in AI regulation by focusing specifically on the protection of personal data. As AI systems rely heavily on vast datasets for machine learning and predictive analytics, ensuring that AI models are developed in a manner that protects individual privacy has become a central concern.

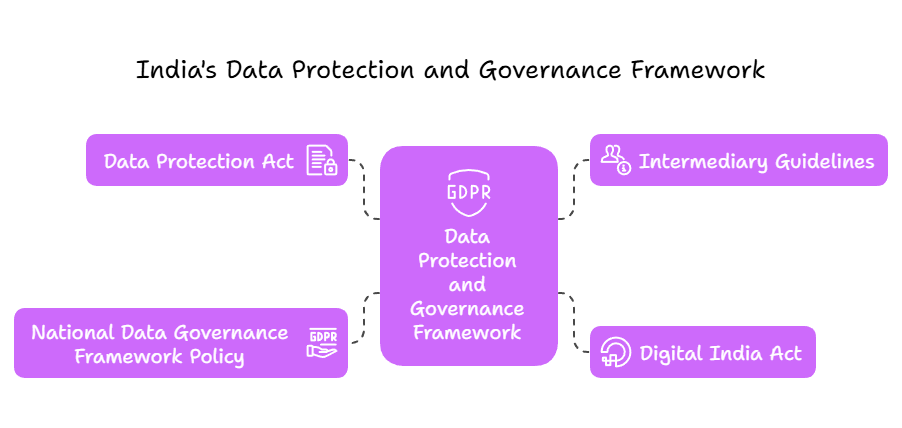

The Data Protection Act mandates organizations to handle personal data responsibly, with strict guidelines on consent, data storage, and security (Sections 4-6). It outlines the grounds for processing personal data, the notice to be given to data principals about the data being collected and its purpose, and the consent process. Section 8 establishes the obligations of data fiduciaries to maintain data accuracy, ensure security, and delete data once its purpose is fulfilled.

Sections 11-17 grant data principals the right to access, correct, and erase their personal data, including obtaining details about the data being processed, the entities involved, and the data shared.

Additionally, the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, were introduced in India to regulate intermediaries such as social media platforms and digital media outlets. These guidelines apply to all intermediaries including significant social media intermediaries (SSMIs).

These rules impose due diligence obligations on intermediaries (Rule 4), requiring them to publish privacy policies and user agreements. They also set user responsibilities, prohibiting the sharing of harmful content, including defamatory or misleading materials. These rules also regulate content from online publishers, ensuring accessibility, transparency and privacy.

In March, 2023, Mr. Rajeev Chandrasekhar, India’s Minister of State for Electronics and Information Technology, announced the proposed Digital India Act (DI Act), which aims to replace the Information Technology Act, 2000 by providing a modern legal framework for India’s evolving digital ecosystem. The objectives of the DI Act include creating rules consistent with changing technologies, offering accessible adjudication for online offenses, delivering timely remedies, safeguarding users against cyber threats, and making internet activities more accountable. Key features of the proposed DI Act include regulating new technologies like 5G and blockchain, aligning with related laws, reviewing the safe harbour principle, requiring strict KYC for wearable devices, and holding intermediaries accountable for content violations.

Lastly, the Draft National Data Governance Framework Policy (NDGFP), released by the Ministry of Electronics and Information Technology (MeitY) for public consultation in May 2022, aims to create a comprehensive framework for data handling and governance. It seeks to modernize and standardize the government’s data collection and management processes. The policy addresses challenges in data management, privacy and digital economy growth, and is currently being finalized.

AI RELATED ADVISORIES BY THE GOVERNMENT OF INDIA

Recognizing the rapid growth of AI technologies, the Ministry of Electronics and Information Technology (MeitY) issued guidelines on March 1, 2024[1], to regulate unreliable AI models, generative AI, and large language models (LLMs). However, due to concerns from the Indian start-up industry, MeitY revised the advisory on March 15, 2024. The updated advisory removed the requirement for government permission for under-tested or unreliable AI models. Instead, it directs intermediaries to label these models to inform users about their potential fallibility or unreliability.

These advisories outline critical directives –

- Bias and discrimination – Platforms must ensure that their AI models or algorithms do not permit bias or discrimination, and do not threaten the integrity of the electoral process or undermine public trust.

- Under-tested AI models – The advisories addressed the deployment of under-tested AI models. The initial advisory required explicit permission from MeitY before such models could be deployed, along with cautionary statements highlighting their potential inaccuracies. The revised advisory removed the need for explicit permission but emphasized labelling these models to inform users of their potential fallibility.

- Labelling AI-generated content – AI-generated media, including text, audio and video, must be labelled with metadata or unique identifiers, especially to combat deepfakes and misinformation. The advisory requires synthetically created content to be identifiable across formats, and advises platforms to employ labels, unique identifiers or metadata to ensure transparency. Furthermore, the advisory mandates the disclosure of software details and tracing of the first originator of such synthetically created content.
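The labelling directive above can be pictured with a small sketch. The advisory does not prescribe a schema, so everything here is an assumption made for illustration: the function names `label_ai_content` and `matches_record`, the label wording, and every metadata field name are hypothetical. The sketch attaches a visible notice, a unique identifier, a content hash, and the software and first-originator details to a piece of generated text.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone

# Hypothetical label text; the advisory does not prescribe exact wording.
AI_LABEL = "This content was generated by an AI system and may be inaccurate."


def label_ai_content(content: str, generator: str, originator_id: str) -> dict:
    """Wrap AI-generated text in a record carrying a visible label and metadata.

    All field names are illustrative assumptions, not a mandated schema.
    """
    return {
        "content": content,
        "label": AI_LABEL,  # user-facing notice of potential fallibility
        "metadata": {
            "content_id": str(uuid.uuid4()),  # unique identifier for this output
            "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
            "generator": generator,  # software that produced the content
            "first_originator": originator_id,  # account that requested it
            "created_at": datetime.now(timezone.utc).isoformat(),
            "synthetic": True,
        },
    }


def matches_record(content: str, record: dict) -> bool:
    """Check whether content is unchanged from what the record describes."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    return digest == record["metadata"]["sha256"]


if __name__ == "__main__":
    record = label_ai_content("Sample generated paragraph.", "example-llm-v1", "user-123")
    print(json.dumps(record, indent=2))
```

Storing a hash alongside the identifier is one possible design choice: it lets a platform later check whether a circulating copy still matches the labelled original. Media formats such as images or video would likely need the metadata embedded in the file itself, which this text-only sketch does not attempt.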

STRATEGIC DOCUMENTS ON AI

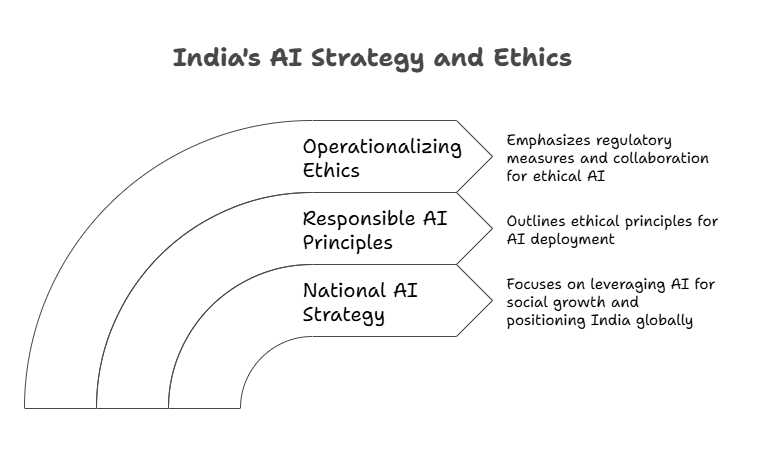

- National Strategy for Artificial Intelligence (2018): NITI Aayog has created a strategy that is adapted to India’s unique needs and aspirations, to leverage AI for social and inclusive growth. This includes exploratory proof-of-concept AI projects, crafting a national strategy for building a vibrant AI ecosystem, and collaboration with experts. The strategy focuses on how India can leverage AI to ensure social and inclusive growth, and aims to position India among global leaders on the AI map with a brand of #AIforAll.

- Responsible AI: A Strategic Approach Document (2021): NITI Aayog published an approach document for Responsible AI, building on the National Strategy for Artificial Intelligence. The document examined the potential risks of AI, along with legislative practices and technological approaches to managing them. It aimed to establish broad ethical principles for the design, development, and deployment of AI in India. The document is divided into two parts. The first outlines seven key principles for responsible AI: safety, equality, inclusivity, privacy, transparency, accountability, and alignment with human values. The second part focuses on operationalizing these principles through regulatory measures and by encouraging ethical practices. It emphasizes collaboration between government, the private sector, and research institutions to balance AI benefits and risks. The document groups the ethical considerations it examines into system considerations and societal considerations. System considerations deal with the principles behind decision making, ensuring rightful inclusion of beneficiaries, and accountability for AI decisions. Societal considerations focus on the impact of automation on job creation and employment.

ACTION POINTS FOR COMPANIES

As India continues to regulate and shape its AI ecosystem, companies involved in AI development and deployment must take several key actions to ensure compliance with current laws, advisories, and guidelines.

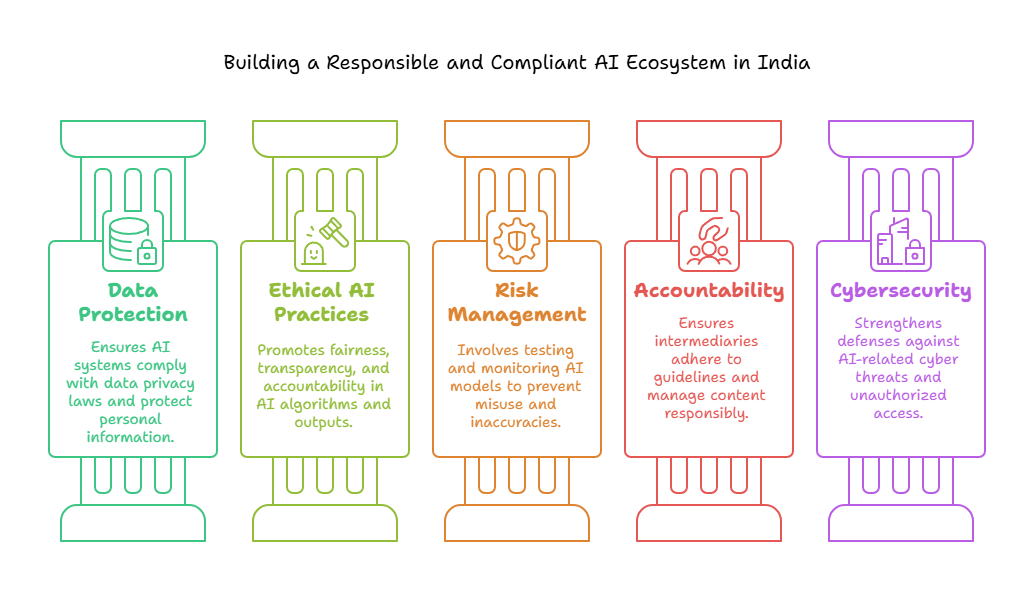

- Comply with data protection laws

  - Ensure that AI systems processing personal data comply with the Digital Personal Data Protection Act, particularly in obtaining proper consent, ensuring data security, and allowing individuals the right to access, correct and erase their data.

  - Implement security measures to protect personal data and maintain its accuracy.

- Implement ethical AI practices

  - Companies should test AI algorithms for fairness and ensure that they do not perpetuate discriminatory outcomes.

  - Companies must label AI-generated content (text, audio, video) to prevent misinformation, deepfakes, and malicious use. Implement metadata and unique identifiers for content to enhance transparency and traceability.

- Manage risks from AI misuse and unreliable models

  - As per MeitY’s advisories, companies must ensure that AI models are thoroughly tested before deployment. For under-tested or unreliable models, provide clear labels and inform users about potential inaccuracies.

  - Continuously monitor the performance of AI models post-deployment to identify any discrepancies or issues that could lead to harm or misinformation.

- Ensure accountability of intermediaries

  - As per the Intermediary Guidelines and Digital Media Ethics Code, ensure that all intermediaries (social media platforms, digital media outlets) comply with due diligence requirements, including publishing privacy policies and user agreements.

  - Implement systems to hold intermediaries accountable for harmful, misleading, or defamatory content through proactive moderation, reporting mechanisms, and quick responses.

- Implement cybersecurity measures

  - Strengthen cybersecurity to prevent breaches and unauthorized access, following best practices for AI systems. Use AI tools to detect and mitigate cybersecurity threats, including AI-driven risks like deepfakes and phishing.

- Facilitate digital literacy and workforce development

  - Companies should invest in upskilling the workforce in AI and data science through training programs and certifications. Promote AI literacy by educating employees and stakeholders on ethical AI, privacy regulations, and the societal impact of AI.

- Follow specific guidelines for generative AI models

  - Companies deploying generative AI models, especially in content creation, should label AI-generated text, images, and videos to prevent misuse in areas such as deepfakes, fake news, or electoral interference, and should give users clear information about the limitations, capabilities and potential risks of generative AI models.

- Foster collaboration with startups and academia

  - Collaborate with startups, academia, and government to promote responsible AI development. Invest in research and development to drive innovation while ensuring ethical practices and compliance with emerging laws.
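The action point above on testing AI algorithms for fairness can be made concrete with a simple check. The sketch below is only one possible approach and is not drawn from any Indian law or advisory: it compares how often each group receives a favourable outcome and reports the ratio of the lowest rate to the highest. The function names, the input format, and the 0.8 review threshold in the usage example are all assumptions chosen for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favourable outcome.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical audit sample: (group, model approved?)
    sample = [
        ("A", True), ("A", True), ("A", True), ("A", False),
        ("B", True), ("B", False), ("B", False), ("B", False),
    ]
    ratio = disparate_impact_ratio(sample)
    # 0.8 is an assumed internal review threshold, not a legal requirement.
    print("needs review" if ratio < 0.8 else "within threshold")
```

A single ratio like this is a coarse screen rather than proof of fairness. A fuller audit would also look at error rates per group and at how the input data was collected.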

CONCLUSION

India’s AI regulatory landscape is still in its formative stages, and while progress has been made, the journey ahead requires bold steps to address the complexities of this transformative technology. India’s focus on ethical AI, data privacy, and transparency is crucial, but the country must also prioritize addressing the gaps in education, infrastructure, and talent development to fully unlock the potential of AI. Furthermore, there is a need for a more dynamic, flexible regulatory approach that can keep pace with rapid technological advancements. As India continues to embrace AI, it should strive to create an ecosystem that not only promotes innovation but also ensures fairness, inclusivity, and accountability. With strategic investments, cross-sector collaboration, and a commitment to addressing both global and local challenges, India can become a leader in shaping AI’s future, one that benefits not only the nation but the global community as well.

[1] eNo. 2(4)/2023-CyberLaws-3, Government of India, Ministry of Electronics and Information Technology, Cyber Law and Data Governance Group, dated 15th March 2024, available at – https://www.meity.gov.in/writereaddata/files/Advisory%2015March%202024.pdf